Are We Burning the Planet for Developer Convenience?

In my last post 👇, "The Fall of the GPU Accidental Empire," I argued that GPUs didn’t win AI because they were the right architecture. They won because they were the only hardware available. They were an accidental empire: convenient, not correct. That was the hardware reality check. But hardware is only half the story.

https://www.linkedin.com/pulse/fall-gpu-accidental-empire-kumar-sreekanti-xukyc/

If GPUs are the accidental empire, then CUDA is the fortress wall that keeps us locked inside. We are currently building the future of AI on a software stack designed for prototyping, not production. And just like enterprise software in the late 90s, we are about to pay the price.

The "Java" Nightmare

If you were coding in the late 90s, you remember the nightmare. You remember the spinning coffee cup. You remember the sluggish interfaces. You remember the "Garbage Collection" pauses that froze your entire application while the system tried to clean up its own mess. Java promised us "Write Once, Run Anywhere." In reality, it was bloated, heavy, and notoriously inefficient. But it won anyway. Why? Because it let us be lazy.

It allowed us to ship code faster because developer productivity mattered more than hardware efficiency. We didn't care about the performance hit because Moore’s Law was there to bail us out. We let the hardware pay the tax for our sloppy software.

Today, AI software is repeating the same mistake. But this time, Moore's Law isn't coming to save us.

The "Graphics" Hack

Let’s be honest about what a GPU actually is. It originated as a chip designed to draw triangles. It is built for vertex shading, pixel processing, and texture mapping. When we use CUDA to run AI, we are essentially performing a massive hack. We are tricking a chip designed for video games into performing linear algebra. CUDA was the "Java" of this era. It provided a brilliant abstraction layer that hid the ugly truth. It allowed us to pretend that these gaming chips were math coprocessors. CUDA-based frameworks masked the memory-bandwidth ceilings and thread divergence underneath. At small scales, the hack worked.

But we aren't at small scales anymore.

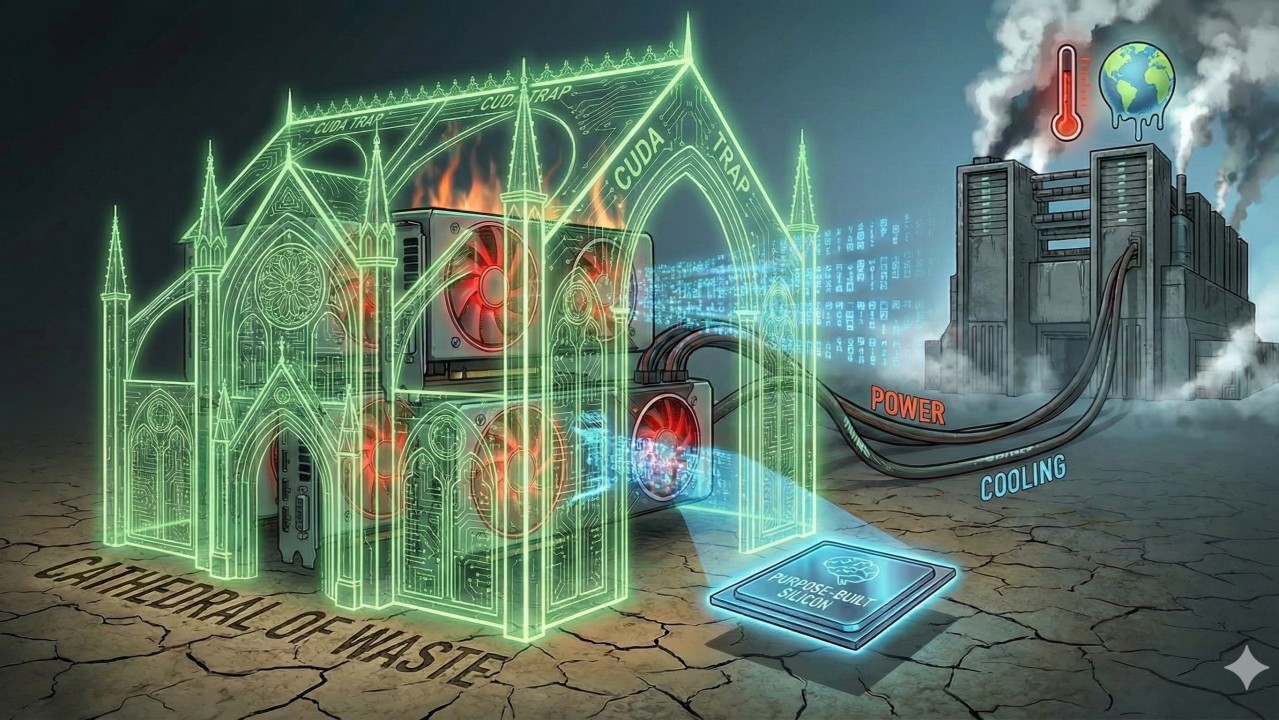
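
To make the "bandwidth ceiling" mentioned above a little more concrete, here is a minimal back-of-envelope sketch using the standard roofline model: attainable throughput is the smaller of peak compute and memory bandwidth times arithmetic intensity (FLOPs per byte moved). The accelerator numbers below are hypothetical round figures chosen for illustration, not measurements of any real chip, and the function names are my own.

```python
def attainable_flops(peak_flops, mem_bandwidth, arithmetic_intensity):
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, mem_bandwidth * arithmetic_intensity)


def matmul_intensity(m, n, k, bytes_per_element=2):
    """FLOPs per byte for an (m x k) @ (k x n) matmul.

    Assumes each matrix crosses the memory bus exactly once (an idealization).
    """
    flops = 2 * m * n * k
    bytes_moved = bytes_per_element * (m * k + k * n + m * n)
    return flops / bytes_moved


# Hypothetical accelerator: round numbers for illustration only.
PEAK_FLOPS = 100e12    # assumed 100 TFLOP/s of peak compute
MEM_BANDWIDTH = 1e12   # assumed 1 TB/s of memory bandwidth

# Batch-of-one inference is effectively a matrix-vector product.
low = matmul_intensity(1, 4096, 4096)
# A large batched matmul reuses each loaded value many times.
high = matmul_intensity(4096, 4096, 4096)

for name, intensity in [("batch-1 matvec", low), ("large matmul", high)]:
    achieved = attainable_flops(PEAK_FLOPS, MEM_BANDWIDTH, intensity)
    print(f"{name}: {intensity:.1f} FLOP/byte, "
          f"{achieved / PEAK_FLOPS:.1%} of peak")
```

Under these assumed numbers, the batch-of-one case sits at roughly 1% of peak compute, while the large matmul is compute-bound. The abstraction layer hides that gap; the silicon still pays for it.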

The "Cathedral of Waste"

We got addicted to the convenience. Frameworks like PyTorch and TensorFlow made it so easy to default to CUDA that we stopped asking if it made sense. We built an entire ecosystem, a "Cathedral of Infrastructure," on top of a foundation that was never designed for this workload. We traded architectural fit for "ecosystem momentum." And now, the bill has arrived.

The Nuclear Option

In the 90s, the "Java Tax" just meant your app was slow. In 2025, the "CUDA Tax" means we are pushing power grids and cooling systems to their limits. Because we insist on using general-purpose gaming chips for AI inference, we are hitting physical walls that software can't abstract away.

We are seeing:

- Escalating power density that traditional air cooling can’t handle.
- Data centers turning into industrial plants, requiring liquid cooling infrastructure that rivals nuclear reactors.
- Massive energy waste moving data back and forth across a chip architecture designed for graphics rendering, not matrix flow.

We are building infrastructure that looks like a sci-fi dystopia, not because the math requires it, but because we are too afraid to leave the "safety" of CUDA.

Conclusion: Wake Up

The "Java Era" of AI is over. We can no longer afford to treat hardware as an infinite resource to be wasted by convenient software. The "CUDA Trap" is the belief that because we used the raft to cross the river, we must carry it on our backs forever. We are optimizing for a world that no longer exists. The model code doesn’t need to change; PyTorch can target new engines. But the engine must. We have to stop forcing graphics cards to think and start moving to purpose-built silicon.

The convenient choice is no longer the safe choice. It is a roadmap to climate disaster.